Wow, it’s been a long time since I had hardware that awful.

My old NAS was a Phenom II x4 from 2009, and I only retired it a year and a half ago when I upgraded my PC. But I put 8GB RAM into that since it was a 64-bit processor (could’ve put up to 32GB I think, since it had 4 DDR3 slots). My NAS currently runs a Ryzen 1700, but I still have that old Phenom in the closet in case that Ryzen dies, but I prefer the newer HW because it’s lower power.

That said, I once built a web server on an Arduino which also supported websockets (max 4 connections). That was more of a POC than anything though.

Oldest I got is limited to 16GB (excluding rPis). My main desktop is limited to 32GB which is annoying, because I sometimes need more. But, I have a home server with 128GB of RAM that I can use when it’s not doing other stuff. I once needed more than 128GB of RAM (to run optimizations on a large ONNX model, iirc), so had to spin up an EC2 instance with 512GB of RAM.

The beauty of self hosting is most of it doesn’t actually require that much compute power. Thus, it’s a perfect use for hardware that is otherwise considered absolutely shit. That hardware would otherwise go in the trash. But use it to self host, and in most cases it’s idle most of the time so it doesn’t use much power anyway.

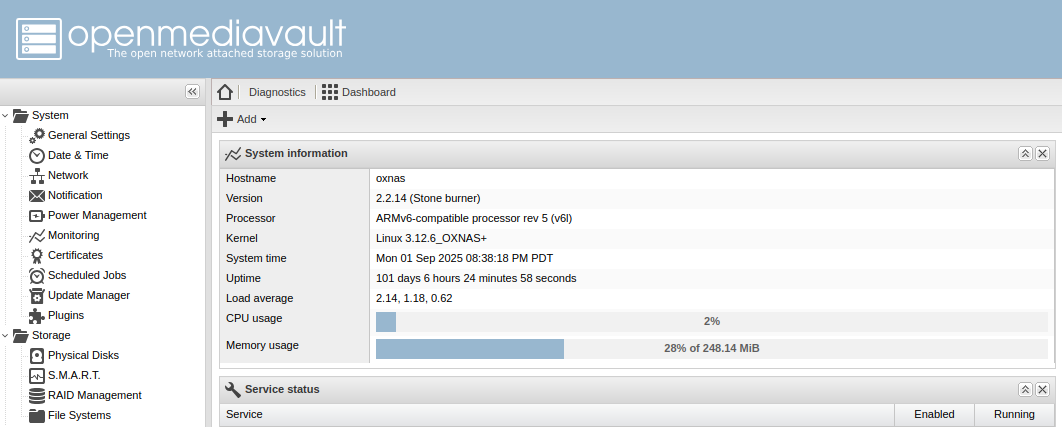

Does this count? ARMv6, 256MB RAM, running OpenMediaVault… hmm, I have to fix my clock. LOL

I just upgraded to a Xeon E5 v4 processor.

I think the max RAM on it is about 1.5 TiB per processor or something.

It’s not new, but it’s not that old either. Still cost me a pretty penny.

your hardware ain’t shit until it’s a first gen core2duo in a random Dell office PC and 2gb of memory that you specifically only use just because it’s a cheaper way to get x86 when you can’t use your raspberry pi.

Also they lie most of the time and it may technically run fine on more memory, especially if it’s older when dimm capacities were a lot lower than they can be now. It just won’t be “supported”.

Maybe not shit, but exotic at that time, year 2012.

The first Raspberry Pi, model B 512 MB RAM, with an external 40 GB 3.5" HDD connected to USB 2.0. It was running ARM Arch BTW.

Next, cheap, second hand mini desktop Asus Eee Box.

32 bit Intel Atom like N270, max. 1 GB RAM DDR2 I think.

Real metal under the plastic shell.

Could even run without active cooling (I broke a fan connector).

I have one of these that I use for Pi-hole. I bought it as soon as they were available. Didn’t realise it was 2012, seemed earlier than that.

This was my media server and kodi player for like 3 years…still have my Pi 1 lying around. Now I have a shitty Chinese desktop I built this year with i5 3rd. Gen with 8gb ram

What’re you hosting on them?

Mainly telemetry, like temperature inside, outside.

Script to read the data and push it into an RRD, later PostgreSQL.

lighttpd to serve static content, later PHP. Once it served as a bridge between LAN and LTE USB modem.
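For anyone wondering what the round-robin part buys you: the store has a fixed size, so the oldest sample gets overwritten and the database never grows. A minimal Python sketch of the idea, with a `deque` standing in for a real RRD file. `RoundRobinStore` and the readings are made up for illustration; this is not the script described above.

```python
from collections import deque
from statistics import mean


class RoundRobinStore:
    """Fixed-size sample store: once full, the oldest sample is dropped."""

    def __init__(self, capacity):
        # deque(maxlen=...) discards the oldest entry on overflow
        self.samples = deque(maxlen=capacity)

    def push(self, timestamp, value):
        self.samples.append((timestamp, value))

    def average(self):
        # Average over whatever is currently retained
        return mean(value for _, value in self.samples)


# Hypothetical readings: (seconds since start, temperature in °C)
store = RoundRobinStore(capacity=3)
for ts, temp in [(0, 20.0), (60, 21.0), (120, 22.0), (180, 23.0)]:
    store.push(ts, temp)
```

With a capacity of 3, the fourth reading pushes out the first one, so only the last three samples are kept.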

7 websites, Jellyfin for 6 people, Nextcloud, CRM for work, email server for 3 domains, NAS, and probably some stuff I’ve forgotten on a $4 computer from a tiny thrift store in BFE Kansas. I’d love to upgrade, but I’m always just filled with joy whenever I think of that little guy just chugging along.

Hell yeah, keep chugging little guy 🤘

Interested in how it does jellyfin, decent GPU or something else?

It does fine. It’s an i5-6500 running CPU transcoding only. Handles 2-3 concurrent 1080p streams just fine. Sometimes there’s a little buffering if there’s transcoding going on. I try to keep my files at 1080p for storage reasons though. This thing’s not going to handle 4k transcoding very well, but it does okay if you don’t expect too much from it.

I’m skeptical that you are doing much video transcoding anyway. 1080p is supported on most devices now, and h264 is best buddies with 1080p content - a codec supported even on washing machines. Audio may be transcoded more often.

Most of my content is h265 and av1 so I assume they are also facing a similar issue. I usually use the jellyfin app on PC or laptop so not an issue but my family members usually use the old TV which doesn’t support it.

AV1 is definitely a showstopper a lot of the time indeed. H265 I would expect to see more on 2k or 4k content (though native support is really high anyway). My experience so far has been seeing transcoding done only because the resolution is unsupported when I try watching 4k videos on an older 1080p only chromecast.

What do you mean by showstopper? I only encode my shows into AV1/opus and I never had any transcoding happening on any of my devices.

It’s well supported on any recent browser compared to x264/x265… especially 10bit encodes. And software decoding is present on nearly any recent device.

Dunno about 4k though, I haven’t the necessary screen resolution to play any 4k content… But for 1080p, AV1 is the way to go IMO.

- Free open/source

- Any browser supported

- Better compression

- Same objective quality with lower bitrate

- A lot of cool open source projects around AV1

It has its own quirks for sure (like every codec) but it’s far from a bad codec. I’m not a specialist on the subject but after a few months of testing/comparing/encoding… I settled with AV1 because it was comparatively better than x264/x265.

Showstopper in the sense that it may not play natively and require transcoding. While x264 has pretty much universal support, AV1 does not… at least not on some of my devices. I agree that it is a good encoder and the way forward but it’s not the best when using older devices. My experience has been with Chromecast with Google TV. Looks like google only added AV1 support in their newest Google TV Streamer (late 2024 device).

Not a huge amount of transcoding happening, but some for old Chromecasts and some for low bandwidth like when I was out of the country a few weeks ago watching from a heavily throttled cellular connection. Most of my collection is h264, but I’ve got a few h265 files here and there. I am by no means recommending my setup as ideal, but it works okay.

Absolutely, whatever works for you. I think it’s awesome to use the cheapest hardware possible to do these things. Being able to use a media server without transcoding capabilities? Brilliant. I actually thought you’d probably be able to get away with no transcoding at all since 1080p has native support on most devices and so does h264. In the rare cases, you could transcode beforehand (like with a script whenever a file is added) so you’d have an appropriate format on hand when needed.
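A rough idea of what that pre-transcode script could look like in Python. This is only a sketch: it assumes `ffmpeg` with `libx264` is installed, and the function names, the `.h264.mp4` suffix, and the set of "native" codecs are my own placeholders, not anything from the setup above.

```python
from pathlib import Path

# Codecs assumed to play natively on the target devices (an assumption;
# adjust for whatever your TVs and Chromecasts actually support).
NATIVE_VIDEO_CODECS = {"h264"}


def needs_transcode(video_codec):
    """True if the codec is not in the assumed natively playable set."""
    return video_codec.lower() not in NATIVE_VIDEO_CODECS


def build_ffmpeg_command(source, out_dir):
    """Build an ffmpeg argument list that re-encodes to H.264 video + AAC audio."""
    target = Path(out_dir) / (Path(source).stem + ".h264.mp4")
    return [
        "ffmpeg", "-i", str(source),
        "-c:v", "libx264", "-crf", "23", "-preset", "medium",
        "-c:a", "aac",
        str(target),
    ]
```

You’d hand the list to `subprocess.run(cmd, check=True)` from whatever watches the library folder, and get the source codec from something like `ffprobe`. Building the command separately from running it makes it easy to dry-run first.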

Heck yeah

Which CRM please?

EspoCRM. I really like it for my purposes. I manage a CiviCRM instance for another job that needs more customization, but for basic needs, I find espo to be beautiful, simple, and performant.

Sweeeeet thank you! Demo looks great. Now to figure out whether an uber n00ber can self host it in a jiffy or not. 🙏

3x Intel NUC 6th gen i5 (2 cores) 32gb RAM. Proxmox cluster with ceph.

I just ignored the limitation and tried with a single SODIMM of 32gb once (out of a laptop) and it worked fine, but just went back to 2x16gb dimms since the limit was still the 2 CPU cores. Lol.

Running that cluster 7 or so years now since I bought them new.

I suggest only running off shit tier since three nodes give redundancy and enough performance. I’ve run entire proof of concepts for clients off them. Dual domain controllers and FC, RD gateway, broker, session hosts, FSLogix etc. Back when MS had only just bought that tech. Meanwhile my home “ARR” just plugs on in docker containers. Even my opnsense router is virtual running on them. Just get a proper managed switch and take in the internet onto a vlan into the guest vm on a separate virtual NIC.

Point is, it’s still capable today.

How is ceph working out for you btw? I’m looking into distributed storage solutions rn. My usecase is to have a single unified filesystem/index, but to store the contents of the files on different machines, possibly with redundancy. In particular, I want to be able to upload some files to the cluster and be able to see them (the directory structure and filenames) even when the underlying machine storing their content goes offline. Is that a valid usecase for ceph?

I’m far from an expert sorry, but my experience is so far so good (literally wizard configured in proxmox, set and forget) even during a single disk loss. Performance for vm disks was great.

I can’t see why regular file would be any different.

I have 3 disks, one on each host, with ceph handling 2 copies (tolerant to 1 disk loss) distributed across them. That’s practically what I think you’re after.
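To put rough numbers on that layout, here is a back-of-the-envelope Python sketch. The 1 TB disk sizes are hypothetical (sizes aren’t given above), and it ignores Ceph’s own overhead and near-full limits, so treat it as an approximation of simple replication rather than what Ceph will actually report.

```python
def replicated_pool_summary(disk_sizes_tb, replicas):
    """Approximate capacity of a pool where every object is stored `replicas` times."""
    raw = sum(disk_sizes_tb)
    return {
        "raw_tb": raw,
        # Each object consumes `replicas` times its own size
        "usable_tb": raw / replicas,
        # Data survives as long as at least one copy remains
        "copies_that_can_be_lost": replicas - 1,
    }


# Hypothetical: three hosts with one 1 TB disk each, 2 copies of everything
summary = replicated_pool_summary([1.0, 1.0, 1.0], replicas=2)
```

So three disks at 2 copies give about half the raw space as usable, with one disk’s worth of failure tolerance.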

I’m not sure about seeing the file system while the hosts are all offline, but if you’ve got any one system with a valid copy online you should be able to see it. I do. But my emphasis is generally to get the host back online.

I’m not 100% sure what you’re trying to do but a mix of ceph as storage remote plus something like syncthing on an endpoint to send stuff to it might work? Syncthing might just work without ceph.

I also run zfs on an 8 disk nas that’s my primary storage with shares for my docker to send stuff, and media server to get it off. That’s just truenas scale. That way it handles data similarly. Zfs is also very good, but until scale came out, it wasn’t really possible to have the “add a compute node to expand your storage pool” which is how I want my vm hosts. Zfs scale looks way harder than ceph.

Not sure if any of that is helpful for your case but I recommend trying something if you’ve got spare hardware, and see how it goes on dummy data, then blow it away try something else. See how it acts when you take a machine offline. When you know what you want, do a final blow away and implement it with the way you learned to do it best.

> Not sure if any of that is helpful for your case but I recommend trying something if you’ve got spare hardware, and see how it goes on dummy data, then blow it away try something else.

This is good advice, thanks! Pretty much what I’m doing right now. Already tried it with IPFS, and found that it didn’t meet my needs. Currently setting up a tahoe-lafs grid to see how it works. Will try out ceph after this.

Odd, I have a Celeron J3455 which according to Intel only supports 8GB, yet I run it with 16 GB

Same here in a Synology DS918+. It seems like the official Intel support numbers can be a bit pessimistic (maybe the higher density sticks/chips just didn’t exist back when the chip was certified?)

I used to self host some stuff on an old 2011 iMac. Worked fine, actually

Got all my docker containers on an i3-4130T. It’s fine.

I had quite a few docker containers going on a Raspberry Pi 4. Worked fine. Though it did have 8GB of RAM to be fair

I’m hosting a minio cluster on my brother-in-law’s old gaming computer he spent $5k on in 2012 and 3 five year old mini-pcs with 1tb external drives plugged into them. Works fine.

Testing federation from my shit hardware… 😅

Looks like it works! Congrats!

Not seeing other comments… but see this over at .world

It’s not absolutely shit, it’s a Thinkpad t440s with an i7 and 8gigs of RAM and a completely broken trackpad that I ordered to use as a PC when my desktop wasn’t working in 2018. Started with a bare server OS then quickly realized the value of virtualization and deployed Proxmox on it in 2019. Have been using it as a modest little server ever since. But I realize it’s now 10 years old. And it might be my server for another 5 years, or more if it can manage it.

In the host OS I tweaked some value to ensure the battery never charges over 80%. And while I don’t know exactly how much electricity it consumes on idle, I believe it’s not too much. Works great for what I want. The most significant issue is some error message that I can’t remember the text of that would pop up, I think related to the NIC. I guess Linux and the NIC in this laptop have/had some kind of mutual misunderstanding.
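For reference, one common way to cap charging on Linux is the `charge_control_end_threshold` file under `/sys/class/power_supply/BAT0/`, which ThinkPads expose on reasonably recent kernels. Whether that is the value tweaked above is a guess on my part (it could just as well have been done through a tool like TLP). A minimal Python sketch, with hypothetical function names:

```python
from pathlib import Path


def set_charge_limit(threshold_file, percent):
    """Write a stop-charging percentage to a sysfs-style threshold file."""
    if not 1 <= percent <= 100:
        raise ValueError("percent must be between 1 and 100")
    Path(threshold_file).write_text(f"{percent}\n")


def read_charge_limit(threshold_file):
    """Read the current stop-charging percentage back."""
    return int(Path(threshold_file).read_text().strip())
```

On real hardware you’d call `set_charge_limit("/sys/class/power_supply/BAT0/charge_control_end_threshold", 80)` as root. Laptops with two batteries may also have a `BAT1`, and the setting may not survive a reboot unless something reapplies it.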

Solid. My backup is a T440p, and behind that a X230, fucking bulletproof.

Yeah, absolutely. Same here, I find used laptops often make GREAT homelab systems, and ones with broken screens/mice/keyboards can be even better since you can get them CHEAP and still fully use them.

I have 4 doing various things including one acting as my “desktop” down in the homelab. But they’re between 4 and 14 years old and do a great job for what they’re used for.

@ripcord @GnuLinuxDude The lifecycle of my laptops:

- years 1-5: I use them.

- years 5-10: my kids use them (generally beating the crap out of them, covering them in boogers/popsicle juice, dropping them, etc).

- years 10-15: low-power selfhosted server which tucks away nicely, and has its own screen so that when something breaks I don’t need to dig up an hdmi cable and monitor.

EDIT: because the OP asks for hardware: my current backup & torrent machine is a 4th gen i3 latitude e7240.