Some of us still do 🙃

If it ain’t broke, don’t fix it.


Anybody that actually professionally deals with this kind of thing understands just how wrong you are.


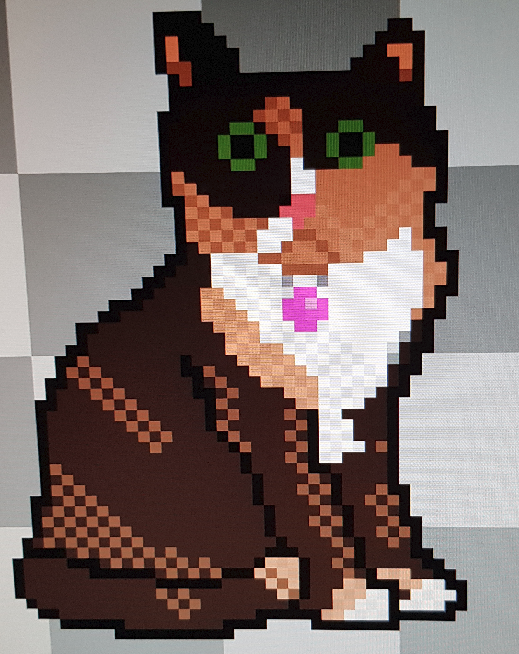

This application looks fine to me.

Clearly labeled sections.

Local on one side, remote on the other

Transfer window on bottom.

No space for anything besides function. Is the joke going over my head?

Uploading the large .war (Web ARchive) monolithically is the archaic way of deploying a web app. Modern tools can be much better.

Of course, it’s going to be difficult to find a modern application where each individually deployed component isn’t at least 7MB of compiled source (and 50-200MB of container), compared to this single 7MB war that contained everything.

And then confused screaming about all the security holes.

This application looks fine to me.

Clearly labeled sections.

Local on one side, remote on the other

Transfer window on bottom

That’s how you know it’s old. It’s not caked full of ads, insanely locked down, and trying to sell you a subscription service.

It even has questionably-helpful mysterious blinky lights at the bottom right which may or may not do anything useful.

Except that FileZilla does come with bundled adware from their sponsors and they do want you to pay for the pro version. It probably is the shittiest GPL-licensed piece of software I can think of.

https://en.wikipedia.org/wiki/FileZilla#Bundled_adware_issues

Aw that sucks


The joke isn’t the program itself, it’s the process of deploying a website to servers.

I’m sure there’s nothing wrong with the program at all =)

Modern webapp deployment approach is typically to have an automated continuous build and deployment pipeline triggered from source control, which deploys into a staging environment for testing, and then promotes the same precise tested artifacts to production. Probably all in the cloud too.

Compared to that, manually FTPing the files up to the server seems ridiculously antiquated, to the extent that newbies in the biz can’t even believe we ever did it that way. But it’s genuinely what we were all doing not so long ago.
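For anyone wondering what “promotes the same precise tested artifacts” might look like, here is a minimal sketch in Python. It assumes a made-up setup where production is just a directory and the artifact that passed staging is identified by its SHA-256 digest. The names `sha256_of` and `promote` are hypothetical; a real pipeline would do this through whatever CI tool it uses.

```python
import hashlib
import shutil
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def promote(artifact: Path, tested_digest: str, prod_dir: Path) -> Path:
    """Copy the artifact into prod_dir only if it matches the tested digest.

    Hypothetical helper: prod_dir stands in for a real production target.
    """
    if sha256_of(artifact) != tested_digest:
        raise ValueError("artifact differs from the one that passed staging tests")
    prod_dir.mkdir(parents=True, exist_ok=True)
    target = prod_dir / artifact.name
    shutil.copy2(artifact, target)
    return target
```

The point is only that production receives the exact bytes that were tested, rather than a fresh rebuild or whatever happened to be in someone’s local folder.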

webapp deployment

Huh? Isn’t this something that runs on the server?

It’s good practice to run the deployment pipeline on a different server from the application host(s) so that the deployment instances can be kept private, unlike the public app hosts, and therefore can be better protected from external bad actors. It is also good practice because this separation of concerns means the deployment pipeline survives even if the app servers need to be torn down and reprovisioned.

Of course you will need some kind of agent running on the app servers to be able to receive the files, but that might be as simple as an SSH session for file transfer.

But it’s genuinely what we were all doing not so long ago

Joke’s on you, my first job was editing files directly in production. It was for a webapp written in Classic ASP. To add a new feature, you made a copy of the current version of the page (e.g. index2_new.asp became index2_new_v2.asp) and developed your feature there by hitting the live page with your web browser.

When you were ready to deploy, you modified all the other pages to link to your new page.

Good times!

manually FTPing the files up to the server seems ridiculously antiquated

But … but I do that, and I’m only 18 :(

Like anything else, it’s good to know how to do it in many different ways, it may help you down the line.

In production in an oddball environment, I have a python script to ftp transfer to a black box with only ftp exposed as an option.
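Not the actual script, but a rough sketch of what such a thing can look like with Python’s standard `ftplib`. The function names, host, and credentials are placeholders made up for illustration.

```python
from ftplib import FTP
from pathlib import Path


def upload_file(ftp, local_path, remote_name=None):
    """Upload one file in binary mode over an already logged-in FTP connection."""
    local_path = Path(local_path)
    remote_name = remote_name or local_path.name
    with local_path.open("rb") as fh:
        # STOR asks the server to store the incoming data under remote_name.
        ftp.storbinary(f"STOR {remote_name}", fh)
    return remote_name


def deploy(host, user, password, files):
    """Connect, log in, and upload each file. Host and credentials are placeholders."""
    with FTP(host) as ftp:
        ftp.login(user=user, passwd=password)
        return [upload_file(ftp, f) for f in files]
```

Something like `deploy("blackbox.example", "user", "secret", ["build/site.zip"])` would push a single file. Plain FTP sends credentials unencrypted, so this only makes sense when the device really offers nothing else.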

Another system rebuilds nightly only if code changes, publishing to a QC location. QC gives it a quick review (we are talking website here, QC is “text looks good and nothing looks weird”), clicks a button to approve, and it gets published the following night.

I’ve had hardware (again, black box system) where I was able to leverage git because it was the only command exposed. Aka, the command they forgot to lock down and are using to update their device. Their intent was to sneakernet a thumb drive over to it for updates, I believe in sneaker longevity and wanted to work around that.

So you should know how to navigate your way around in FTP, it’s a good thing! But I’d also recommend learning about all the other ways as well, it can help in the future.

(This comment brought to you by “I now feel older for having written it” and “I swear I’m only in my forties.”)

Not to rub it in, but “in my forties” could be read as “almost the entirety of the modern web was developed during my adulthood.”

It could, but I’m in my early 40s.

I just started early with a TI-99/4A, then a 286, before building my own p133.

So the “World Wide Web!” posters were there for me in middle school.

Still old lol

Think of this like saying using a scythe to mow your lawn is antiquated. If your lawn is tiny then it doesn’t really matter. But we’re talking about massive “enterprise scale” lawns lol. You’re gonna want something you can drive.

Old soul :)

It’s perfectly fine for some private page etc., but when you make business software for customers that require 99.9% uptime with severe contractual penalties, it’s probably too wonky.

That’s probably okay! =) There’s some level of pragmatism, depending on the sort of project you’re working on.

If it’s a big app with lots of users, you should use automation because it helps reliability.

If there are lots of developers, you should use automation because it helps keep everyone organised and avoids human mistakes.

But if it’s a small thing with a few devs, or especially a personal project, it might be easier to do without :)

Then switch to using something more like scp ASAP? :-)

Nah, it’s probably more efficient to .tar.xz it and use netcat.

On a more serious note, I use sftp for everything, and git for actual big (but still personal) projects, but then move files and execute scripts manually.

And also, I cloned my old laptop’s /dev/sda3 to my new laptop’s /dev/main/root (on /dev/mapper/cryptlvm) via netcat over a Gigabit connection. It worked flawlessly. I love Linux and its philosophy.

Ooh I’ve never heard of it. netcat I mean, cause I’ve heard of Linux 😆.

The File Transfer Protocol is just very antiquated, while scp is simple. Possibly netcat is too :-).

Netcat is basically just a utility to listen on a socket, or connect to one, and send or receive arbitrary data. And since everything is a file in Linux, you can handle every part of your system (e.g. block devices, meaning physical or virtual disks) like a normal file, i.e. as a stream of bytes. So you can just transfer a block device (e.g. /dev/sda3) over raw sockets.
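To make that concrete, here is a small Python sketch of the same idea: one side listens and writes whatever arrives to a file, the other side connects and streams a file’s bytes. This is not how netcat itself is implemented, and the function names are made up for illustration.

```python
import socket


def listen(port=0, host="127.0.0.1"):
    """Open a listening TCP socket; port 0 lets the OS pick a free port."""
    return socket.create_server((host, port))


def receive_file(path, server, chunk_size=65536):
    """Accept one connection and write everything received to path."""
    conn, _addr = server.accept()
    with conn, open(path, "wb") as dst:
        # recv() returns b"" once the sender closes the connection.
        while chunk := conn.recv(chunk_size):
            dst.write(chunk)


def send_file(path, host, port, chunk_size=65536):
    """Connect to host:port and stream the file's bytes, roughly `nc host port < path`."""
    with socket.create_connection((host, port)) as conn, open(path, "rb") as src:
        while chunk := src.read(chunk_size):
            conn.sendall(chunk)
```

Because a block device can be opened like any other file (given enough privileges), the `path` could in principle be something like /dev/sda3. Note there is no encryption or integrity check here, same as with plain netcat.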


Yes, exactly that.

Shitty companies did it like that back then, and shitty companies still don’t properly utilize the easy tools they have available for controlled deployment nowadays. So nothing really changed, just that the number of people (and with that, the number of morons) skyrocketed.

I had automated builds out of CVS with deployment to staging, and option to deploy to production after tests over 15 years ago.

after tests

What is “tests”?

Tests is the industry name for the automated paging when production breaks

Promotes/deploys are just different ways of saying file transfer, which is what we see here.

Nothing was stopping people from doing cicd in the old days.

Sure, but having a hands-off pipeline for it which runs automatically is where the value is at.

Means that there’s predictability and control in what is being done, and once the pipeline is built it’s as easy as a single button press to release.

How many times when doing it manually have you been like “Oh shit, I just FTPd the WRONG STUFF up to production!” - I know I have. Or even worse you do that and don’t notice you did it.

Automation takes a lot of the risk out.

Not to mention the benefits of versioning and being able to rollback! There’s something so satisfying about a well set-up CI/CD pipeline.

We did versioning back in the day too. $application copied to $application.old

But was $application.old_final the one to rollback to, or $application.old-final2?!
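One common way around the `.old_final2` guessing game is to keep each release in its own directory and point a `current` symlink at the live one. A minimal sketch, assuming POSIX symlinks and release directory names that sort chronologically (e.g. timestamps); `activate` and `rollback` are hypothetical names:

```python
import os
from pathlib import Path


def activate(releases_dir: Path, version: str) -> None:
    """Point releases_dir/current at releases_dir/<version>."""
    if not (releases_dir / version).is_dir():
        raise FileNotFoundError(f"no such release: {version}")
    tmp_link = releases_dir / "current.tmp"
    if tmp_link.is_symlink():
        tmp_link.unlink()
    tmp_link.symlink_to(version)  # relative link to the release directory
    # Renaming over the old link swaps it in one step on POSIX systems.
    os.replace(tmp_link, releases_dir / "current")


def rollback(releases_dir: Path) -> str:
    """Re-activate the release that sorts just before the current one."""
    versions = sorted(
        p.name for p in releases_dir.iterdir()
        if p.is_dir() and not p.is_symlink()
    )
    current = os.readlink(releases_dir / "current")
    idx = versions.index(current)
    if idx == 0:
        raise RuntimeError("no older release to roll back to")
    activate(releases_dir, versions[idx - 1])
    return versions[idx - 1]
```

With directories named like 20240101 and 20240201, rolling back is one call and there is never any doubt about which copy is the previous one.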

I remember joining the industry and switching our company over to full Continuous Integration and Deployment. Instead of uploading DLLs directly to prod via FTP, we could verify each build, deploy to each environment, run some service tests to see if pages were loading, all the way up to prod, with rollback. I showed my manager, and he shrugged. He didn’t see the benefit of this when, in his eyes, all he needed to do was drag and drop, and load the page to make sure all was fine.

Unsurprisingly, I found out that this is how he builds websites to this day…

I deploy my apps with the SFTP command line.

Did it for the first time two years ago. It was for my parent’s business website. I see nothing wrong with this method.

I used CuteFTP, but I am a gentleman

“Felt cute, might transfer files later, idk”

I am currently updating a minecraft server soooooo

if your hosting provider 1) is not yourself and 2) requires you to use anything like filezilla, get a new hosting provider

Why?

Unless you’re using Linode or some other general-purpose VPS on which you have installed a Minecraft server, having to use anything other than a WebUI to exchange files with the server really strikes me as sketchy. A dedicated can’t-run-anything-else Minecraft hosting provider even giving random users SSH access is sketchy enough, but requiring you to use it to update the game… that level of not having an IT guy is just a security nightmare waiting to happen.

Guessing by your comment that you’ve actually rented a general purpose Linux VPS and not gotten suckered into Honest Pete’s Discount CreeperHost. In that case, carry on.

Tbf I think BisectHost now lets me just upload normally, but I’m so used to using Filezilla I’m doing so out of habit.

That’s fair actually. I was more worried about Minecraft hosts that allow you to upload your own game executable (which I sincerely hope don’t exist)

The last time I rented a Minecraft server was probably over 10 years ago, and as far as I can tell, having to upload stuff through FTP was normal at the time.

Although reading this thread has also taught me that I know nothing about how deployment works and I need to catch up on that.

FileZilla isn’t even that old school, cuteftp was the OG one afaik.

Yeah, I used to use filezilla and I’m not that old… Right? …Right?

Sure, grandpa/grandma, time for your medicine.

No way, WS_FTP was more OG.

I remember WS_FTP LE leaving log files everywhere. What a pain to clean up.

Yeah you’re totally right, I forgot about that.

There was flashfxp too but I think that was a fair bit later. Revolutionized being a warez courier.

I’m not FBI

being a warez courier.

Spill. You bring those R5s across the ocean? Send audio from the handicap audio jack at the multiplex? Hustle up some telecines? Sneak Battletoads outta the backroom at GameStop before it hit shelves?

Back in the day (mid/late 90’s), there were private ftp servers that required a ratio. Some of these were run by release groups and hard to get on, some were more public. Couriers would download from one site and upload to another to build their ratio and get access to the good sites.

Before people figured out you could connect two ftp servers together directly, you would have to download to your computer and reupload. Most people were on dialup, so that was a non trivial time commitment.

Ohhh didn’t know about that sense of the word in that context. Interesting!

Do you have any idea what the warez scene is like today?

Also there was a bot on the former Warez-BB dot org that would post scene releases seemingly moments after they pre’d. Imagine those kinds of people are on Telegram or something today…

Nope, no idea what it’s like today.

The joys of having $xx/mo to reward creators. (Maybe only $.xx goes to the actual creators but still, it feels better!)

Oh god, I know all of these.

Also fuck Tim Kosse. Bundled Filezilla with malware and fucked up my machine in 2014. Had to reinstall Windows. I’ll never use it again.

I use WinSCP on Windows and ForkLift on macOS.

This is from before my times, but… Deploying an app by uploading a pre built bundle? If it’s a fully self-contained package, that seems good to me, perhaps better than many websites today…

That’s one nice thing about Java. You can bundle the entire app in one .jar or .war file (a .war is essentially the same as a .jar but it’s designed to run within a Servlet container like Tomcat).

PHP also became popular in the PHP 4.x era because it had a large standard library (you could easily create a PHP site with no third-party libraries), and deployment was simply copying the files to the server. No build step needed. Classic ASP was popular before it, and also had no build step, but it had a very small standard library and relied heavily on COM components which had to be manually installed on the server.

PHP is mostly the same today, but these days it’s JIT compiled so it’s faster than the PHP of the past, which was interpreted.

A lot are still doing that and haven’t moved up

(Please at least use SFTP!)

rsync gang when?

The year Linux takes over the desktops!

I feel like the reason nobody uses FileZilla etc. anymore is because everybody that wanted it migrated to Linux already. So seriously, it already happened.

FTP Explorer all the way! Preferred that to filezilla… I mean it didn’t support sftp but I liked it.

this app uses java swing?

I never liked FileZilla. I used Cyberduck

There are just so few decent FTP clients out there, and all of them are very ugly lol

Why make bugs with a better UI?

Why not make a better UI after ironing out the bugs?

Why make a better UI when it’ll probably introduce a slew of new bugs?

FTP isn’t really used much any more. SFTP (file transfers over SSH) mostly took over, and people that want to sync a whole directory to the server usually use rsync these days.


Not when I used it like 10 years ago. Not sure about now