
Thank you both (@NinjaFox@lemmy.blahaj.zone, @ChaoticNeutralCzech@feddit.org) for taking the time to make this post not just more accessible but somewhat more bit-/link-rot-resilient by duplicating the image’s info as a text comment.

We don’t talk about it as much as authoritarian censorship, IP- and copyright-related takedowns, and their ilk, but in my experience image macros/memes often have regrettably short lifetimes as publicly accessible data. It might be for any number of reasons, including:

- because many of them are created on free generator websites that can’t afford to store every generated image forever,

- because people often share screenshots of things instead of a link to it,

- because for-profit social media websites are increasingly requiring account creation to view previously accessible content,

or (more probably) a combination of all three and more.

In any case, as silly as image memes are, they’re also an important vector for keeping culture and communities alive (at least here on the fediverse). In 5-10 years, this transcription has a much higher chance of still hanging around in some instance’s backups than the image it is transcribing.

P.S.: sure, knowyourmeme is a thing, but they’re still only one website, and I’m not sure there’s much recent fediverse stuff there yet. The mastodon page was last updated in 2017 and conflates the software project with the mastodon.social instance (likely through a poor reading of its first source, a The Verge article that’s decent but was written in 2017).

P.P.S.: ideally, OP (@cantankerous_cashew@lemmy.world) could add this transcription directly to the post’s alt text, but I don’t know if they use a client that makes that easy for them…

The issue isn’t just local. “This is predicted to cascade into plunging property values in communities where insurance becomes impossible to find or prohibitively expensive - a collapse in property values with the potential to trigger a full-scale financial crisis similar to what occurred in 2008,” the report stressed.

I know this isn’t the main point of this thread, but I think this is another way in which treating housing as a store of value and an investment, instead of decommodifying it as a basic right, sets us up for failure as a society. Not only does it incentivize hoarding and gentrification while the number of homeless people continues to grow, it completely tanks our ability to relocate, which is a crucial component of our ability to adapt to the changing physical world around us.

Think of all the expensive L.A. houses that just burned. All that value wasted, “up in smoke”. How much of those homes’ value came from supply and demand, and how much from their owners deciding to invest in their resale value? How much money, human time, and effort could have been invested elsewhere over the years, notably into the parts of a community that can more reliably survive displacement, like tools and skills? I don’t want to argue that “surviving displacement” should become an everyday focus; rather the opposite: decommodifying housing could relax the existing incentives to invest in a house’s market value. When your ability to live in a home goes from “mostly guaranteed only by how much you can sell your current home for” to “basically guaranteed (within society’s current capabilities)”, people will more often decide to invest their money, time, and effort into literally anything other than increasing their houses’ resale value. In my opinion, this would mechanically lead to a society that loses less to forest fires and many other climate “disasters”.

I have heard that Japan almost has a culture of disposable-yet-non-fungible homes: a house is built to last its builders’/owners’ lifetime at most, and when the plot of land is sold the new owner will tear down the existing house to build their own. I don’t know enough to say how (or if) this ties into the archipelago’s relative overabundance of tsunamis, earthquakes, and other natural disasters, but from the outside it seems like many parts of the USA could benefit from moving closer to this Japanese relationship with homes.

Why do we even need a server? Why can’t I pull this directly off the disk drive? That way, if the computer is healthy enough to run our application at all, we don’t have dependencies that can fail and cause us to fail. I looked around and there were no SQL database engines that would do that, and one of the guys I was working with says, “Richard, why don’t you just write one?” “Okay, I’ll give it a try.” I didn’t do that right away, but later on there was a funding hiatus. This was back in 2000, and if I recall correctly, Newt Gingrich and Bill Clinton were having a fight of some sort, so all government contracts got shut down. I was out of work for a few months, and I thought, “Well, I’ll just write that database engine now.”

Gee, thanks Newt Gingrich and Bill Clinton?! Government shutdown leads to actual production of value for everyone instead of just making a better military vessel.
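The “pull it directly off the disk drive” idea in the quote is what SQLite (the engine Richard Hipp went on to write) does: the database is an ordinary file, and the engine runs inside the application process. Python’s standard-library `sqlite3` module embeds it, so a minimal sketch needs no server at all. The table name, columns, and rows below are made up purely for illustration.

```python
import os
import sqlite3
import tempfile


def write_then_read(db_path):
    """Write rows to a database file, close it, then reopen and read them back.

    No server process is started or contacted: sqlite3.connect() simply
    opens (or creates) the file at db_path.
    """
    conn = sqlite3.connect(db_path)
    try:
        with conn:  # commits the transaction if the block succeeds
            conn.execute("CREATE TABLE IF NOT EXISTS notes (title TEXT, year INTEGER)")
            conn.executemany(
                "INSERT INTO notes VALUES (?, ?)",
                [("first", 2000), ("second", 2001)],
            )
    finally:
        conn.close()

    # Reopening shows the data persisted in the file itself.
    conn = sqlite3.connect(db_path)
    try:
        return conn.execute("SELECT title, year FROM notes ORDER BY year").fetchall()
    finally:
        conn.close()


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "example.db")  # hypothetical file name
    print(write_then_read(path))  # [('first', 2000), ('second', 2001)]
    print(os.path.exists(path))   # True: the whole database is this one file
```

The only “dependency” is the file system: if the machine can run the program and read the disk, it can use the database.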

🎶 Just a pair of Hitler fanboys, preparing to enter the white house 🎶

I can’t believe it just clicked for me that we shouldn’t be watching out for the “next Hitler” (which I was getting ready to assign to Trump based on the past 9 years) but for a group of fetishizing copycats.

Reminds me a bit of how Robert E. Lee and much of the confederacy saw themselves as real-life Misérables (https://boundarystones.weta.org/2019/05/13/how-les-miserables-became-lees-miserables).

The common point between Lee, Hitler, and the Misérables is they were all lost causes in the end (thankfully). Hopefully today’s regressive shit-stains-of-a-human-being will go the same way.

Ooooh, that’s a good first test / “sanity check”!

May I ask what you are using as a summarizer? I’ve played around with locally running models from Hugging Face, but I never did any fine-tuning, let alone training “from scratch”. My (paltry) experience with the HF models is that they’re incapable of staying confined to the given context.

I’m not sure if this is how @hersh@literature.cafe is using it, but I could totally see myself using an LLM to check my own understanding like the following:

- Read a chapter

- Read the LLM’s summary of the chapter

- Make sure I can understand and agree or disagree with each part of the LLM’s summary.

Ironically, this exercise works better if the LLM “hallucinates”; noticing a hallucination in its summary is a decent metric for my own understanding of the chapter.

It just takes a little effort to filter to see and reach the right people’s content. Otherwise, I don’t think completely withdrawing would be very beneficial in my industry and the era I live in.

I have been thinking about this a lot. Wrestling with how much consumption I can allow myself to sustain, and how much I can allow myself to abstain from.

As more and more of my interactions with the world around me happen through machines and/or the internet, I can’t just “take a break from computers” for a few days to give my brain a rest from that environment anymore. From knowledge to culture, so much is being shared and transferred digitally today. I agree with the author that we can’t just ignore what’s going on in the digital spaces that we frequent, but many of these spaces are built to get you to consume. Just as one must go into the hotbox to meet the heaviest weed smokers, one shouldn’t stay in the hotbox taking notes for too long at once because of the dense ambient smoke. Besides, how do you find the stuff worth paying attention to without wading through the slop and bait? The web has become an adversarial ecosystem, so we must adapt our behavior and expectations to continue benefiting from its best while staying as safe as possible from its worst.

Some are talking about the “dark forest”, and while I agree, I think a more apt metaphor is that of small rural villages vs. urban megalopolises. The internet started out so small that everyone knew where everyone else lived, and everyone depended on everyone else too much to ever think of aggressively exploiting anyone. Nowadays the safe gated communities speak in hushed tones of the less savory neighborhoods where you can lose your wallet in a moment of inattention, while they spend their days in the supermarkets and hyper-malls owned by their landlords.

The setup for WALL-E might take place decades or centuries from now, but it feels like it’s already happened to the web. And that movie doesn’t even show how the humans manage to rebuild Earth and their society; it just implies, through the murals in the ending credits, that they succeed.

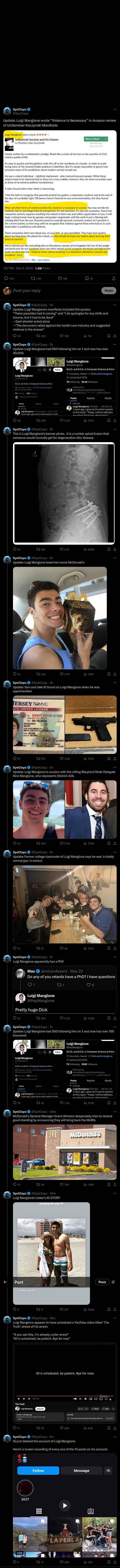

A tweet thread I found:

link to first tweet: https://x.com/SyeClops/status/1866189782419984714

It doesn’t map neatly onto “left” or “right”, but this person is clearly a product of our times.

Math underlies programming in a similar fashion to how physics underlies driving a car. You never need to know about Newton’s laws of motion to pass your driving test and go your whole life without a ticket. At the same time, I will readily claim that any driver who doesn’t improve after learning about Newton’s laws of motion had already internalized those laws through experience.

Math will help your intuition with how to tackle problems in programming. From finding a solution to anticipating how different constraints (notably time and memory) will affect which solutions are available to you, experience working on math problems - especially across different domains in math - will grease the wheels of your programmer mind.
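A small, classic illustration of the time constraint mentioned above (my example, not from any particular source): summing the integers 1 to n. The loop is the “obvious” solution and takes time proportional to n; a bit of algebra (Gauss’s formula n(n+1)/2) gives the same answer in constant time, which matters once n gets huge.

```python
def sum_loop(n: int) -> int:
    """O(n) time: add each integer from 1 to n one by one."""
    total = 0
    for k in range(1, n + 1):
        total += k
    return total


def sum_formula(n: int) -> int:
    """O(1) time: Gauss's closed form n(n+1)/2.

    n(n+1) is always even, so integer division is exact.
    """
    return n * (n + 1) // 2


print(sum_loop(100), sum_formula(100))  # 5050 5050
```

Both functions agree, but only the second one stays instant for something like n = 10**12; knowing that a closed form exists is exactly the kind of intuition the math provides.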

Math on its own will probably not be enough (many great mathematicians are quite unskilled at programming). Just as driving a car is about much more than just the physics involved, there is a lot more to programming than just the math.

I wonder what other applications this might have outside of machine learning. I don’t know if, for example, intensive 3D games absolutely need 16-bit floats (or larger), or if it would make sense to try using this “additive implementation” for their floating-point multiplications as well. Modern desktop gaming PCs can easily slurp up to 800 W.
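To get a feel for the accuracy trade-off, here is a sketch of a much older, related trick (Mitchell’s logarithmic approximation), not necessarily the exact method the linked work uses: for positive float32 values, adding the two raw bit patterns as integers adds the exponents exactly and the mantissas approximately, and subtracting the bits of 1.0 removes the doubled exponent bias. It assumes positive, normal inputs whose product also stays in the normal float32 range.

```python
import struct


def f32_to_bits(x: float) -> int:
    """Reinterpret a value (rounded to float32) as its 32-bit unsigned pattern."""
    return struct.unpack("<I", struct.pack("<f", x))[0]


def bits_to_f32(b: int) -> float:
    """Reinterpret a 32-bit unsigned pattern as a float32 value."""
    return struct.unpack("<f", struct.pack("<I", b))[0]


ONE_BITS = f32_to_bits(1.0)  # 0x3F800000: exponent bias with a zero mantissa


def approx_mul(a: float, b: float) -> float:
    """Approximate a * b with one integer addition and one subtraction.

    Works because (1 + ma)(1 + mb) is approximated by 1 + ma + mb,
    i.e. the cross term ma * mb is dropped. A mantissa carry spills into
    the exponent, which is the other branch of Mitchell's approximation.
    The result never overestimates; worst-case relative error is about 11%.
    """
    return bits_to_f32(f32_to_bits(a) + f32_to_bits(b) - ONE_BITS)


print(approx_mul(2.0, 3.0))  # 6.0 (exact: 2.0 has a zero mantissa)
print(approx_mul(3.0, 3.0))  # 8.0 (true value 9.0, the worst case)
```

An ~11% error is obviously too coarse for game physics, but the idea of trading exactness for cheap integer adds is the same one; whether a refined variant would be good enough for, say, lighting or particle effects is an open question.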

From what I understand, you could unironically do this with a file system using Btrfs. You’d maybe need a udev rule to automate tracking when the “Power Ctrl+Z” gets plugged in.
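One possible reading, sketched under loud assumptions: when the device is plugged in, a udev rule runs a script that takes a read-only Btrfs snapshot, giving you a restore point to “undo” back to later. The subvolume path, snapshot directory, script path, and USB vendor/product IDs below are all hypothetical placeholders; `btrfs subvolume snapshot -r <source> <dest>` is the real command for a read-only snapshot, and the script defaults to a dry run that only prints it.

```python
#!/usr/bin/env python3
# Hypothetical udev rule (e.g. /etc/udev/rules.d/99-power-ctrl-z.rules);
# the IDs and script path are placeholders, not a real device:
# ACTION=="add", SUBSYSTEM=="usb", ATTRS{idVendor}=="abcd", ATTRS{idProduct}=="1234", RUN+="/usr/local/bin/power_ctrl_z.py --run"
import shlex
import subprocess
import sys
from datetime import datetime

# Hypothetical paths: adjust to your own Btrfs layout.
SOURCE_SUBVOLUME = "/home"
SNAPSHOT_DIR = "/.snapshots"


def build_snapshot_cmd(source, snapshot_dir, when):
    """Build the argv for a read-only Btrfs snapshot named after a timestamp."""
    name = when.strftime("%Y-%m-%d_%H-%M-%S")
    return ["btrfs", "subvolume", "snapshot", "-r", source, f"{snapshot_dir}/{name}"]


def main(dry_run=True):
    cmd = build_snapshot_cmd(SOURCE_SUBVOLUME, SNAPSHOT_DIR, datetime.now())
    if dry_run:
        print(shlex.join(cmd))  # just show what would run
    else:
        subprocess.run(cmd, check=True)  # needs root and a Btrfs filesystem


if __name__ == "__main__":
    main(dry_run="--run" not in sys.argv)
```

Snapshots on Btrfs are copy-on-write, so taking one is nearly instant, which suits udev’s expectation that `RUN` commands finish quickly. Rolling back would then mean restoring from (or swapping in) the latest snapshot, which is left out here.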